Samsung’s Tiny AI Model Challenges DeepSeek and Gemini on Reasoning | Is It True?

How can a tiny Samsung AI model compete with massive AI systems like DeepSeek R1 and Gemini 2.5 Pro when it comes to reasoning?

The answer is simple, but powerful: Samsung changed how the model thinks, not how big it is.

Instead of following the industry’s obsession with scale, Samsung focused on reasoning quality. And that decision is why this research is now being talked about across the AI world.

The big idea in one line

In late 2025, Samsung Research (SAIL Montréal) introduced the Tiny Recursive Model (TRM), which demonstrates that better reasoning does not always require a bigger AI model.

That single idea challenges years of assumptions in artificial intelligence and explains why this tiny model is getting attention in 2026.

Why “bigger AI” started to hit a limit

Most modern AI systems are built as large language models. They are trained to predict the next word (strictly speaking, the next token) in a sequence, which makes them great at writing, explaining, and summarising.

But reasoning is different.

Reasoning requires an AI system to:

- Pause instead of rushing

- Question its own logic

- Fix mistakes before moving on

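Many large models struggle here because once they choose a path, they rarely step back. Each new token is conditioned on the tokens already produced, so an early mistake tends to carry forward. This is a commonly cited weakness of autoregressive language models and a major reason why AI reasoning remains inconsistent.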

Samsung’s research starts with this problem.

What is Samsung’s TRM (Tiny Recursive Model)?

TRM stands for Tiny Recursive Model, and it is intentionally small.

Here’s what defines this Samsung AI model:

- Around 7 million parameters

- Trained on roughly 1,000 carefully selected examples

- Designed only for logical and abstract reasoning

- Built to be efficient, not conversational

To be clear, this is not a chatbot and not a consumer AI product. It is a reasoning-focused research model, which is why it belongs in discussions about #SamsungAIModel and #AIReasoning rather than general chat AI.
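To put the parameter count in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. The helper name `weight_memory_mb`, the bytes-per-parameter values, and the 100-billion-parameter comparison model are illustrative assumptions, not figures from Samsung's research.

```python
def weight_memory_mb(num_params: int, bytes_per_param: int = 2) -> float:
    """Approximate size of the weights in megabytes (1 MB = 10**6 bytes).

    Assumes every parameter is stored at the same precision and ignores
    activations, optimizer state, and any runtime overhead.
    """
    return num_params * bytes_per_param / 1_000_000


TRM_PARAMS = 7_000_000            # "around 7 million parameters" (from the article)
LARGE_PARAMS = 100_000_000_000    # hypothetical 100-billion-parameter model, for scale only

print(weight_memory_mb(TRM_PARAMS))       # 16-bit weights -> 14.0
print(weight_memory_mb(TRM_PARAMS, 4))    # 32-bit weights -> 28.0
print(weight_memory_mb(LARGE_PARAMS))     # 16-bit weights -> 200000.0
```

Under these assumptions, the tiny model's weights fit in a few tens of megabytes, while the hypothetical large model needs roughly 200 GB for weights alone.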

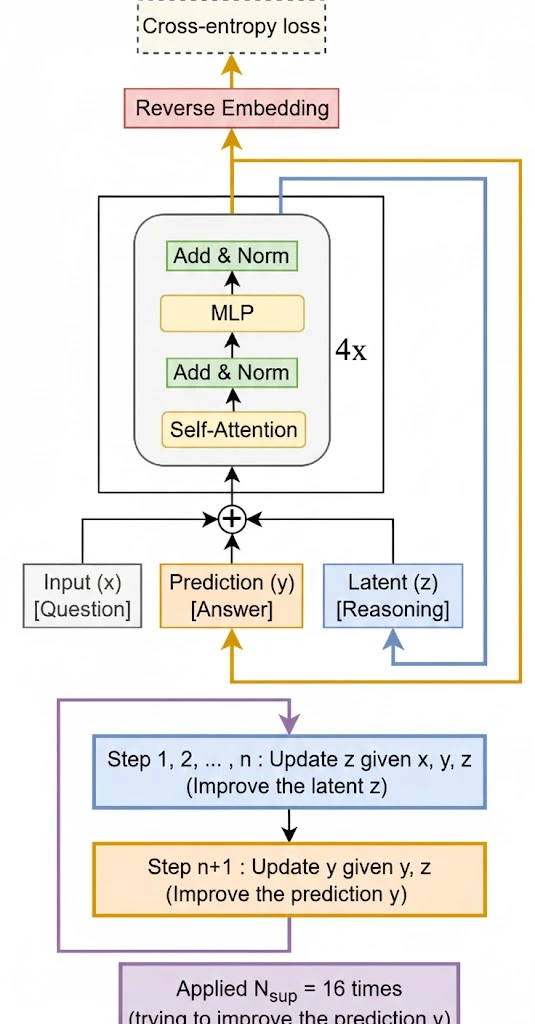

How TRM thinks differently (the key innovation)

Instead of producing an answer once and moving on, TRM uses recursive reasoning.

In simple terms, it works like this:

- It produces an initial answer

- It checks whether the logic makes sense

- It looks for errors or weak assumptions

- It corrects itself

- It repeats this process until the reasoning improves

This self-correction loop is what makes TRM different from most large AI models. Instead of guessing better words, it reasons better.
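The loop described above can be sketched as a toy program. This is only an illustration of the propose, check, correct, repeat pattern: in TRM the checking and correcting are carried out by a small learned network, whereas here they are hand-written rules. The puzzle (putting numbers in ascending order) and the function names `find_error`, `correct`, and `refine` are all hypothetical.

```python
from typing import List, Optional


def find_error(answer: List[int]) -> Optional[int]:
    """Check step: return the index of the first out-of-order pair, or None."""
    for i in range(len(answer) - 1):
        if answer[i] > answer[i + 1]:
            return i
    return None


def correct(answer: List[int], i: int) -> List[int]:
    """Correct step: swap the flagged pair and return a new list."""
    fixed = list(answer)
    fixed[i], fixed[i + 1] = fixed[i + 1], fixed[i]
    return fixed


def refine(initial: List[int], max_steps: int = 100) -> List[int]:
    """Start from an initial answer and keep correcting it until no error is found."""
    answer = list(initial)
    for _ in range(max_steps):
        i = find_error(answer)
        if i is None:          # the check passes, so stop refining
            break
        answer = correct(answer, i)
    return answer


print(refine([3, 1, 2]))  # [1, 2, 3]
```

The `max_steps` cap is a design choice for the sketch: a refinement loop needs a budget so it always terminates, even when the checker keeps finding problems.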

That’s why researchers describe it as a reasoning-first AI model.

Why ARC-AGI is where things got interesting

The reason TRM is being compared with giant AI systems comes from its performance on ARC-AGI (the Abstraction and Reasoning Corpus for Artificial General Intelligence).

ARC-AGI is designed to test:

- Pattern discovery

- Abstract logic

- Problem-solving with very few examples

These tasks are designed so that memorisation does not help: each puzzle follows its own hidden rule. Even very large models often fail here.
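To make "problem-solving with very few examples" concrete, here is a minimal sketch of an ARC-style puzzle. The layout (a handful of input/output grid pairs plus a test input) is loosely modelled on how such tasks are usually presented. The specific grids, the mirror rule, and the helper names are made up for illustration.

```python
from typing import Callable, Dict, List

Grid = List[List[int]]

# Hypothetical toy task: every output is the input mirrored left-to-right.
task: Dict[str, list] = {
    "train": [
        {"input": [[1, 0], [2, 0]], "output": [[0, 1], [0, 2]]},
        {"input": [[3, 3, 0]], "output": [[0, 3, 3]]},
    ],
    "test": [{"input": [[0, 5], [0, 0]]}],
}


def flip_horizontal(grid: Grid) -> Grid:
    """Candidate rule: mirror each row."""
    return [list(reversed(row)) for row in grid]


def identity(grid: Grid) -> Grid:
    """Candidate rule: leave the grid unchanged."""
    return [list(row) for row in grid]


def fits_all_examples(rule: Callable[[Grid], Grid], examples: List[dict]) -> bool:
    """A rule is only acceptable if it reproduces every training output exactly."""
    return all(rule(ex["input"]) == ex["output"] for ex in examples)


print(fits_all_examples(identity, task["train"]))         # False
print(fits_all_examples(flip_horizontal, task["train"]))  # True
print(flip_horizontal(task["test"][0]["input"]))          # [[5, 0], [0, 0]]
```

With only two worked examples, the solver has to infer the rule itself and then apply it to a grid it has never seen, which is why recalling training data is of little use.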

On some ARC-AGI reasoning tasks, Samsung’s TRM achieved competitive or stronger results than much larger models, including those from DeepSeek and Google’s Gemini.

That is the exact context behind the phrase “challenges DeepSeek and Gemini on reasoning.”

Does this mean Samsung beat DeepSeek and Gemini?

No, and this distinction is important.

Large AI models still win at:

- Language fluency

- Writing and summarisation

- General knowledge

But when the task is pure reasoning, the comparison changes.

Here’s what you really need to know:

Samsung TRM

- Very small and efficient

- Strong at logic and reasoning

- Low compute and energy cost

- Limited language ability

Large AI models

- Extremely powerful in language

- Massive training data

- Inconsistent reasoning on abstract tasks

- Very expensive to run

This is why discussions around #SmallAIModels and #EfficientAI are becoming serious, not theoretical.

Why experts care about this result

Researchers see TRM as a signal that the AI industry may be entering a post-scaling phase, where:

- Bigger models alone are not enough

- Architecture matters more

- Reasoning deserves dedicated design

Many experts now expect future AI systems to combine:

- Large models for language and context

- Small reasoning models for logic, verification, and error checking

This hybrid approach could improve reliability and reduce hallucinations, one of the biggest challenges in #ArtificialIntelligence today.
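One way to picture this hybrid setup is a propose-and-verify loop: a large model suggests candidate answers and a small, cheap checker decides which one to accept. The sketch below uses stand-ins for both roles. `propose` returns a fixed list instead of calling a real language model, and `verify` is a hand-written arithmetic check rather than a trained reasoning model.

```python
from typing import Callable, Iterable, List, Optional


def first_verified(candidates: Iterable[str],
                   verify: Callable[[str], bool]) -> Optional[str]:
    """Return the first candidate the verifier accepts, or None if all are rejected."""
    for candidate in candidates:
        if verify(candidate):
            return candidate
    return None


def propose() -> List[str]:
    """Stand-in for a large model answering 'What is 17 * 6?' (first guess is wrong)."""
    return ["112", "102", "96"]


def verify(answer: str) -> bool:
    """Stand-in for a small reasoning model: accept only the correct product."""
    return answer.isdigit() and int(answer) == 17 * 6


print(first_verified(propose(), verify))  # 102
```

Returning `None` when nothing passes is deliberate: a system built this way can decline to answer instead of passing along an unverified guess.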

Why this fits Samsung’s long-term AI strategy

Samsung builds chips, devices, and embedded systems. For that ecosystem, tiny reasoning-focused AI models are far more practical than massive cloud-only systems.

They are ideal for:

- On-device intelligence

- Edge computing

- Robotics and automation

- Privacy-sensitive environments

This makes TRM a strategic research move, not a publicity experiment, and explains why it aligns closely with #EdgeAI and #OnDeviceAI.

What this tells us about AI in 2026

AI progress in 2026 is no longer just about size. It’s about:

- How models reason

- How efficiently they run

- How reliably they correct mistakes

Samsung’s Tiny Recursive Model shows that thinking better can sometimes matter more than thinking bigger.

Final takeaway

Samsung’s TRM does not replace DeepSeek or Gemini, and it was never meant to. What it does is challenge a core assumption in AI development.

By showing that a tiny Samsung AI model can reason effectively on complex tasks, this research points toward a smarter, more efficient future for artificial intelligence.

That is why this model matters and why it deserves attention well beyond 2026.

FAQs

What is Samsung’s new AI model?

Samsung’s new AI model is called TRM (Tiny Recursive Model). It is a research-focused model designed to improve logical reasoning using a small number of parameters rather than massive scale.

How is Samsung’s AI model different from ChatGPT or Gemini?

Unlike chat-based models such as Gemini or ChatGPT, Samsung’s TRM does not focus on conversation or writing. It focuses on reasoning and self-correction, making it better suited for logic-heavy tasks.

Can a small AI model really compete with large language models?

Yes, in specific areas. While small AI models cannot match large language models in language fluency or general knowledge, they can compete on reasoning benchmarks where logic and error correction matter more than size.

Why is Samsung’s AI model compared with DeepSeek and Gemini?

Samsung’s TRM is compared with DeepSeek and Gemini because it showed competitive reasoning performance on benchmarks like ARC-AGI, despite being much smaller and more efficient.

What is ARC-AGI and why is it important?

ARC-AGI is a reasoning benchmark that tests abstract thinking and pattern recognition. It is important because it reveals whether an AI model can reason, not just memorise or predict text.

Does Samsung’s AI model outperform DeepSeek and Gemini?

No, not overall. Samsung’s TRM performs better only on specific reasoning tasks. Large models still outperform it in language, creativity, and general knowledge.

Why are AI researchers interested in small AI models?

Researchers are interested because small AI models are:

1. More efficient

2. Cheaper to run

3. Easier to deploy on devices

4. Better suited for edge and on-device AI

This aligns with the growing focus on #EfficientAI.

What does recursive reasoning mean in AI?

Recursive reasoning means the AI model checks and corrects its own logic multiple times instead of producing a single, fixed answer. This helps reduce logical errors.

Is Samsung’s AI model meant for consumers?

No. TRM is a research model, not a consumer product. It is designed to explore new AI architectures rather than replace existing chatbots.

What does this mean for the future of AI?

Samsung’s research suggests that future AI systems may combine:

1. Large models for language and knowledge

2. Small models for reasoning and verification
