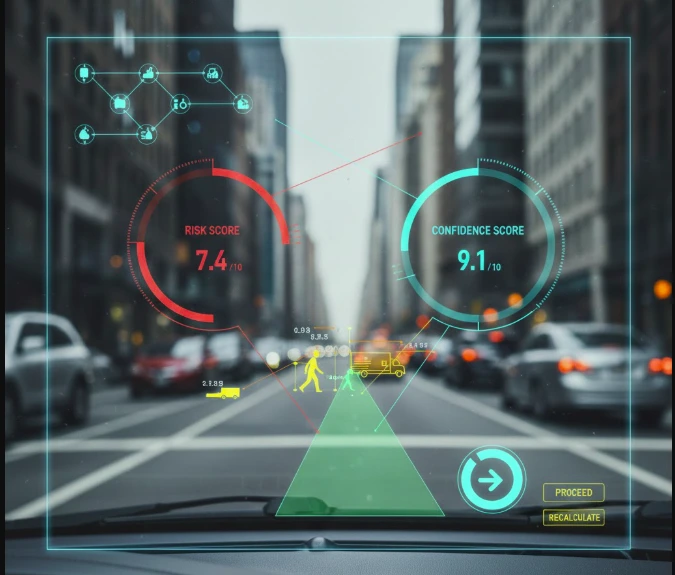

AI Weighs Risk and Confidence Scores in Real-World Decisions

When an AI system makes a decision, it is not choosing between “right” and “wrong” the way a human does.

Instead, it is calculating risk and confidence.

Every approval, rejection, alert, or recommendation produced by AI comes from a numerical process that estimates how likely an outcome is and how certain the model is about that estimate. Understanding this process is critical if you want to trust AI systems, audit them, or use them in high-stakes environments.

This article explains how AI weighs risk and confidence scores, what those numbers actually mean, and why they matter more than raw accuracy in 2026.

What Risk and Confidence Mean in AI Systems

In AI decision systems, risk and confidence are not opinions. They are calculated values derived from data patterns.

- A risk score estimates the likelihood of an undesirable outcome

- A confidence score estimates how certain the model is about its prediction

An AI system does not say “this is fraud.”

It says something closer to:

“There is a 72% probability this behavior matches known fraud patterns, with a confidence level of 0.81.”

These numbers guide what happens next.

How Machine Learning Models Calculate Risk

Machine learning models learn from historical data. During training, they observe patterns linked to past outcomes.

For example:

- Fraudulent vs legitimate transactions

- Successful vs defaulted loans

- Safe vs unsafe behavior

When new data arrives, the model compares it against learned patterns and produces a risk probability.

This is the key difference between rule-based and machine learning decision systems:

- Rule-based systems trigger actions using fixed thresholds

- Machine learning systems estimate probability first, then act
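To make the contrast concrete, here is a minimal Python sketch. The $5,000 limit, the feature names, and the model weights are all hypothetical; a real model would learn its weights from historical outcomes rather than have them typed in. The rule compares one value against a fixed limit, while the model combines several signals into a probability and only then applies a threshold.

```python
import math

# Hypothetical rule-based check: one fixed threshold, no probability involved.
def rule_based_flag(amount: float) -> bool:
    return amount > 5_000  # assumed business rule

# Hypothetical weights for a tiny logistic-regression risk model.
# In practice these would be learned from historical outcomes.
WEIGHTS = {"amount_thousands": 0.4, "new_device": 1.5, "foreign_ip": 1.2}
BIAS = -3.0

def risk_probability(features: dict[str, float]) -> float:
    """Estimate the probability of an undesirable outcome (0.0 to 1.0)."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))  # logistic (sigmoid) function

def ml_flag(features: dict[str, float], threshold: float = 0.5) -> bool:
    # Estimate the probability first, then act on it.
    return risk_probability(features) >= threshold

# A $2,000 transaction from a new device and a foreign IP address.
tx = {"amount_thousands": 2.0, "new_device": 1.0, "foreign_ip": 1.0}
print(rule_based_flag(2_000))          # False: below the fixed limit
print(round(risk_probability(tx), 3))  # 0.622
print(ml_flag(tx))                     # True
```

The fixed rule lets this transaction through, but the model still scores it above 0.5 because several weaker signals appear together.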

Risk scoring allows AI to operate in uncertain environments where fixed rules would fail.

What Confidence Scores Actually Represent

A confidence score does not mean the decision is correct.

It means the current input is consistent with patterns the model has seen before, so its prediction is clear-cut rather than borderline.

High confidence usually occurs when:

- The input data closely matches the training data

- Patterns are clear and frequent

- Uncertainty is low

Low confidence occurs when:

- The data is incomplete

- Patterns are rare

- Inputs fall outside expected ranges
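One way to turn these conditions into a number is sketched below. It assumes confidence comes from agreement among an ensemble of models, plus a check for inputs outside the ranges seen in training. Both the ensemble approach and the 0.3 cap are illustrative assumptions; other systems use calibrated probabilities, prediction entropy, or dedicated out-of-distribution detectors.

```python
import statistics

# Assumption: confidence is derived from agreement among several models
# (an ensemble). This is one common approach, not the only one.
def ensemble_confidence(risk_estimates: list[float]) -> float:
    """Return 1.0 when all models agree, falling toward 0.0 as they disagree."""
    spread = statistics.pstdev(risk_estimates)  # at most 0.5 for values in [0, 1]
    return max(0.0, 1.0 - 2.0 * spread)

# Hypothetical feature ranges observed during training.
TRAINING_RANGES = {"amount_thousands": (0.0, 50.0), "account_age_days": (0.0, 3650.0)}

def out_of_range(features: dict[str, float]) -> bool:
    """True if any known feature falls outside the range seen in training."""
    return any(
        not (low <= features[name] <= high)
        for name, (low, high) in TRAINING_RANGES.items()
        if name in features
    )

def confidence(risk_estimates: list[float], features: dict[str, float]) -> float:
    score = ensemble_confidence(risk_estimates)
    # Inputs outside expected ranges cap confidence (the cap value is an assumption).
    return min(score, 0.3) if out_of_range(features) else score

print(round(confidence([0.70, 0.72, 0.74], {"amount_thousands": 2.0}), 3))  # 0.967
print(confidence([0.70, 0.72, 0.74], {"amount_thousands": 120.0}))         # 0.3
```

The risk estimates are identical in both calls; only the unfamiliar input drags confidence down.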

This is why confidence scores are critical for human-in-the-loop AI decision making.

Risk vs Confidence: They Are Not the Same

| Aspect | Risk Score | Confidence Score |
| --- | --- | --- |
| What it measures | Likelihood of an outcome | Certainty of the prediction |
| Based on | Pattern probability | Model stability |
| Can be high when wrong | Yes | Yes |
| Used for | Action thresholds | Escalation decisions |

A system can show:

- High risk + low confidence → escalate to human

- Low risk + high confidence → automate safely

This distinction is often misunderstood.

Why AI Systems Rarely Use Confidence Alone

In real deployments, decisions are not made on confidence scores alone.

Most systems combine:

- Risk score

- Confidence score

- Rule-based thresholds

For example:

- Approve automatically if risk < 10% and confidence > 80%

- Flag for review if risk is moderate but confidence is low

- Block immediately if the risk exceeds a hard rule
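The three example rules translate almost directly into code. This is a minimal sketch: the 0.90 hard-block ceiling is an assumed value, and routing every remaining case to human review (including moderate risk with high confidence, which the rules above leave open) is a conservative design choice rather than a fixed standard.

```python
from enum import Enum

class Action(Enum):
    APPROVE = "approve"
    REVIEW = "review"
    BLOCK = "block"

# Approval thresholds from the example rules; HARD_BLOCK_RISK is an assumption.
AUTO_APPROVE_MAX_RISK = 0.10
AUTO_APPROVE_MIN_CONFIDENCE = 0.80
HARD_BLOCK_RISK = 0.90

def decide(risk: float, confidence: float) -> Action:
    # 1. The hard rule runs first and overrides the model.
    if risk > HARD_BLOCK_RISK:
        return Action.BLOCK
    # 2. Automate only when risk is low AND the model is confident.
    if risk < AUTO_APPROVE_MAX_RISK and confidence > AUTO_APPROVE_MIN_CONFIDENCE:
        return Action.APPROVE
    # 3. Everything else (moderate risk, or low confidence) goes to a human.
    return Action.REVIEW

for risk, conf in [(0.05, 0.92), (0.45, 0.55), (0.95, 0.99), (0.72, 0.81)]:
    print(risk, conf, decide(risk, conf).value)
# 0.05 0.92 approve
# 0.45 0.55 review
# 0.95 0.99 block
# 0.72 0.81 review
```

The fraud example from earlier (72% risk, 0.81 confidence) lands in review: it is too risky to approve automatically but below the hard-block line.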

This hybrid approach explains why rule-based and machine learning decision systems coexist instead of replacing each other.

How Risk Thresholds Are Set in Practice

Risk thresholds are not universal. They are business decisions.

Factors that influence thresholds include:

- Regulatory exposure

- Financial loss tolerance

- Safety implications

- User trust requirements

In healthcare and finance, thresholds are conservative.

In marketing or personalization, thresholds are more flexible.

This is why the same AI model can behave very differently across industries.
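A small sketch shows how one model score can lead to different actions. The domain names come from the text above, but the threshold values are hypothetical and chosen only to illustrate that stricter domains automate less.

```python
# Hypothetical per-domain policies: the numbers are illustrative assumptions,
# not industry standards.
POLICIES = {
    "healthcare": {"max_auto_risk": 0.02, "min_confidence": 0.95},
    "finance": {"max_auto_risk": 0.05, "min_confidence": 0.90},
    "marketing": {"max_auto_risk": 0.30, "min_confidence": 0.60},
}

def can_automate(domain: str, risk: float, confidence: float) -> bool:
    """True if this domain's policy allows acting without human review."""
    policy = POLICIES[domain]
    return risk <= policy["max_auto_risk"] and confidence >= policy["min_confidence"]

# The same model output, judged under three different policies.
risk, confidence = 0.04, 0.92
for domain in POLICIES:
    print(domain, can_automate(domain, risk, confidence))
# healthcare False
# finance True
# marketing True
```

The model and its output never change; only the business policy around it does.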

Confidence Scores and Explainable AI

Confidence scores play a major role in explainable AI decision systems.

They help:

- Justify why automation was allowed

- Identify when human review was necessary

- Detect data drift over time
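In practice, justifying a decision usually means logging the numbers behind it. The record below is a hypothetical structure (the field names, the `risk-model-v1` version label, and the case ID are illustrative), showing the kind of entry an auditor could later use to see why a case was automated or escalated.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

@dataclass
class DecisionRecord:
    """Hypothetical audit entry capturing why a decision was automated or escalated."""
    case_id: str
    risk: float
    confidence: float
    action: str
    reason: str
    model_version: str
    timestamp: str

def record_decision(case_id: str, risk: float, confidence: float, action: str,
                    reason: str, model_version: str = "risk-model-v1") -> DecisionRecord:
    # Store the scores, the outcome, and the rule that justified it, with a UTC timestamp.
    return DecisionRecord(
        case_id=case_id, risk=risk, confidence=confidence, action=action,
        reason=reason, model_version=model_version,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )

entry = record_decision("case-001", 0.05, 0.92, "approve",
                        "risk < 0.10 and confidence > 0.80")
print(json.dumps(asdict(entry), indent=2))
```

Recording the model version alongside the scores matters because the same inputs may score differently after retraining.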

If confidence steadily drops, it often signals that:

- User behavior has changed

- The training data is outdated

- Model retraining is required
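A basic drift check can compare recent average confidence with a baseline measured at deployment. The sketch below assumes a fixed-size rolling window and a tolerated drop of 0.10; the `ConfidenceMonitor` class and those values are hypothetical and would need tuning for a real system.

```python
from collections import deque
from statistics import mean

class ConfidenceMonitor:
    """Hypothetical drift check: alert when recent average confidence falls
    well below the baseline measured at deployment time."""

    def __init__(self, baseline: float, window: int = 100, max_drop: float = 0.10):
        self.baseline = baseline            # e.g. mean confidence on validation data
        self.recent = deque(maxlen=window)  # keeps only the latest `window` scores
        self.max_drop = max_drop            # tolerated drop before alerting (assumed)

    def observe(self, confidence: float) -> bool:
        """Record one prediction's confidence; return True if drift is suspected."""
        self.recent.append(confidence)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet
        return self.baseline - mean(self.recent) > self.max_drop

monitor = ConfidenceMonitor(baseline=0.88, window=5)
for c in [0.87, 0.85, 0.80, 0.74, 0.70, 0.66]:
    print(c, monitor.observe(c))
# Only the last observation prints True.
```

With a five-prediction window, the monitor stays quiet while the average is 0.792 and raises an alert once it falls to 0.75.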

This makes confidence tracking a governance tool, not just a technical metric.

Where Risk and Confidence Go Wrong

AI failures often happen when:

- Confidence is mistaken for correctness

- Thresholds are set too aggressively

- Humans are removed entirely from review loops

High confidence does not guarantee ethical, fair, or safe outcomes.

It only reflects mathematical certainty, not moral judgment.

This is why AI decision systems often fail when deployed without oversight.

Why Humans Still Matter in AI Decisions

Risk and confidence scores support decisions, but they do not replace responsibility.

Humans are still needed to:

- Interpret edge cases

- Override automation

- Handle ambiguous outcomes

In 2026, the most reliable AI systems are not fully autonomous.

They are guided systems, where numbers inform decisions but do not control them outright.

How This Fits Into Modern AI Decision Systems

Most enterprise AI systems now follow this structure:

- Machine learning models calculate risk

- Confidence scores measure certainty

- Rules define safety boundaries

- Humans review exceptions

This layered approach balances speed, accuracy, and accountability.

Final Takeaway

AI does not “decide” the way humans do.

It weighs risk, measures confidence, and follows constraints.

Understanding how these scores work is essential if you want to trust AI, audit its behavior, or deploy it responsibly. In 2026, the smartest AI systems are not the ones that act fastest, but the ones that know when not to act.

FAQs

How does AI calculate risk scores?

AI calculates risk by comparing new data to patterns learned from historical examples and estimating outcome probability.

What is a confidence score in AI?

A confidence score measures how certain the model is about its prediction, not whether it is correct.

Can AI make decisions with low confidence?

Yes, but responsible systems escalate low-confidence decisions to human review instead of automating them.

Are confidence scores reliable?

They are reliable indicators of model certainty, but not guarantees of correctness.

Why are risk and confidence used together?

Using both helps systems balance automation speed with safety and oversight.
